Very long chat sessions can confuse Bing, says Microsoft

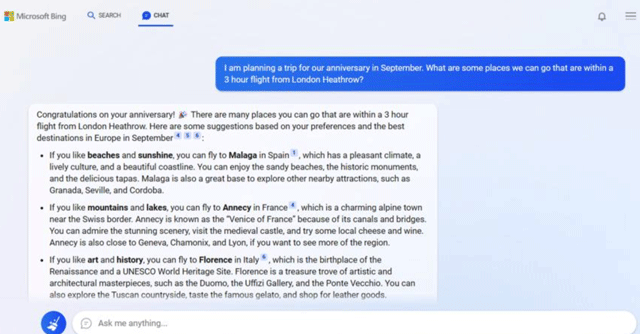

Extended chat sessions of 15 questions or more can “confuse the model on what questions it is answering”, Microsoft said in a blog post on Wednesday about Bing, its new ChatGPT-powered conversational search platform. The post comes after users revealed that Bing had produced misinformation and given rude and insinuating responses to their queries.

In the blog post, Microsoft said it would quadruple the ‘grounding data’ behind Bing to help the platform produce accurate results on recent events, such as the financial figures of public companies around the world and live scores from ongoing sports tournaments. Dmitri Brereton, an independent US-based AI and search engine expert, had reported that Bing produced incorrect financial figures for the publicly listed clothing brand Gap during its early demonstrations.

The company added that it is “looking at” how to give users “more fine-tuned control”, so that Bing avoids producing rude responses that could offend users. It claimed that Bing responds in such a tone only to queries written in a similar tone. It also said the platform will soon get a tool to reset the context of a search, to prevent Bing’s responses from becoming repetitive.

The post comes a week after the company unveiled the new conversational interface integrated with its search engine, Bing, with OpenAI’s generative conversational artificial intelligence (AI) tool, ChatGPT, among the key platforms powering it. Microsoft launched the ChatGPT-powered Bing search tool a day after fellow big tech firm Google launched its own AI-powered search platform, Bard, which uses the company’s own large language model, Language Model for Dialogue Applications (LaMDA), to process and understand text queries.

Large language models, such as OpenAI’s Generative Pre-trained Transformer (GPT) or Google’s LaMDA, draw on vast troves of data to understand questions written in plain text and generate a response based on them. Bing uses ChatGPT along with other enhancements to the model and a connection to the internet, forming a model of its own that Microsoft calls Prometheus.

Users have since criticised both Microsoft and Google, calling their rollouts hasty and unprepared. Google, for instance, lost $100 billion in market value a day after Bard was first demonstrated, owing to erroneous responses that the tool generated to user queries.

However, Bing is not the only tool to have made errors in its responses. User reports highlighted that OpenAI’s ChatGPT also often made basic mistakes, such as failing to solve simple logical reasoning questions, failing to state whether Abraham Lincoln’s assassination took place on the same continent where he was at the time, and producing misinformation about cultural history in the US, among others.