Meta announces new chip for training and running AI models

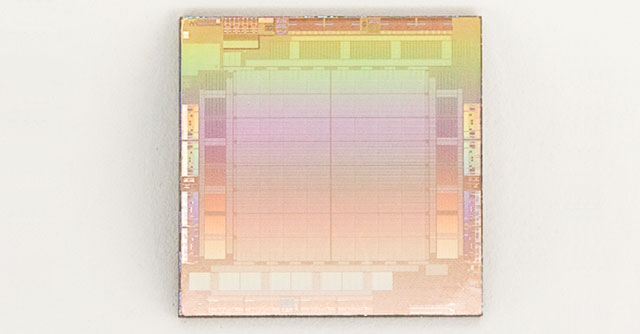

Meta has announced a new chip for training and running artificial intelligence models. Called the Meta Training and Inference Accelerator, or MTIA for short, it is an application-specific integrated circuit (ASIC). An ASIC is a chip designed for a specific class of tasks rather than for general-purpose computing, which lets it run those tasks more efficiently.

Meta designed the first-generation MTIA ASIC in 2020 and has been using it for its internal workloads. MTIA has 128 MB of internal memory, which can scale up to 128 GB, the company claims. The chip also offers greater compute power and efficiency than CPUs and is customised for Meta’s internal workloads, said Santosh Janardhan, vice president and head of infrastructure, in a blog post. “By deploying both MTIA chips and GPUs, we’ll deliver better performance, decreased latency, and greater efficiency for each workload,” he added.

That said, Meta acknowledged that the chip needs improvement, especially in memory and networking, which may become bottlenecks as AI models grow in size. Currently, MTIA’s focus is on inference for recommendation workloads across Meta’s apps. According to a TechCrunch report, the chip is slated to come out by 2025.

Meta joins the likes of Microsoft and Google, which are also building their own custom AI chips. According to a recent Bloomberg report, Microsoft is working on an in-house AI chip in partnership with chipmaker AMD. Google, too, launched its fourth-generation tensor processing unit (TPUv4) for machine learning tasks in 2022.

Apart from the MTIA ASIC, Meta also shared details about its Research SuperCluster (RSC) AI supercomputer, which was first introduced in 2022. The company claims it is one of the fastest AI supercomputers in the world. According to Meta, it can train the next generation of large AI models that will form the basis for new augmented reality tools, content understanding systems, real-time translation technology and more.

Meta has also announced its next-generation data center, designed to support AI hardware for both training and inference. “This new data center will be an AI-optimized design, supporting liquid-cooled AI hardware and a high-performance AI network connecting thousands of AI chips together for data center-scale AI training clusters,” Janardhan said in Meta’s blog.

According to Meta, these announcements are part of its ‘ambitious plan’ to build an infrastructure backbone suited for AI.