MeitY's advisory on AI models -- What's at stake

On March 1, the Ministry of Electronics and Information Technology (MeitY) issued a late-night advisory on the deployment of artificial intelligence (AI) models, large language models (LLMs), and AI algorithms. The notice asks social intermediaries and platforms to obtain government approval for their under-testing or unreliable models before making them available on the internet to Indian users. The notice doesn’t explicitly define unreliable models.

The advisory asks these platforms to host a consent popup mechanism to apprise users of the ‘possible and inherent fallibility or unreliability’ of the models’ output. The ministry has asked the intermediaries to submit an action taken-cum-status report within 15 days of the advisory’s issuance.

In this regard, MeitY has cited the Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021, further stating that the advisory is an extension of the December 23 advisory on misinformation dissemination through deepfakes.

What led to this advisory

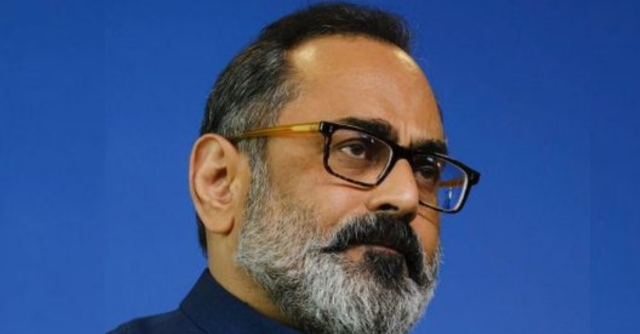

The advisory was issued after Google’s LLM produced a provocative remark about Prime Minister Narendra Modi. IT minister Rajeev Chandrasekhar, at the time, said, “These are direct violations of Rule 3(1)(b) of Intermediary Rules (IT rules) of the IT act and violations of several provisions of the Criminal code.” He also added that Digital Nagriks (digital citizens) are not to be experimented on with unreliable models and algorithms.

Later, in an interview with The Times of India, Chandrasekhar said that the government sent a notice to Google, to which the tech giant responded with an apology, saying that the platform is unreliable. To be sure, last month Google temporarily halted the image generation feature of Gemini after it was found to generate inaccurate results and display ‘pro-diversity’ bias.

“The emphasis here (the advisory) seems to be that proper stress testing ought to be conducted on the AI models and algorithms, so that they are reliable and do not create any bias. The interpretation is subjective and the government has to be satisfied that the testing was sufficient for the model/algorithm to go to market. They have made it an ex-ante obligation,” said Shreya Suri, partner with the TMT practice of IndusLaw. “Algorithmic bias can be common, depending on what kind of data the algorithm is trained on. That said, rulemaking through advisories is not something which is common in this industry, but it seems to be happening over the last two to three months.”

Concerns around the advisory

The industry was quick to react to this advisory. Many opine that such obligations may stifle innovation, especially when the industry is still at a developing stage. In response to some of these concerns, Minister Chandrasekhar said in a tweet that the advisory is not applicable to startups, but is aimed at ‘significant’ and large platforms. “Process of seeking permission , labelling & consent based disclosure to user abt untested platforms is insurance policy to platforms who can otherwise be sued by consumers,” he added.

“Regulation in an industry which is very fastly growing has to be clear, precise and light handed. And anytime we will come up with heavy handed regulation, we are going to see interference in the development of an industry. Having said that, this technology is now pretty much changing the ecosystem in a very different way, so we cannot just rely on self regulation by companies. However, it (government’s regulation) cannot be issued ad hoc,” said Mishi Chaudhary, technology lawyer and the founder of Software Freedom Law Centre, India.