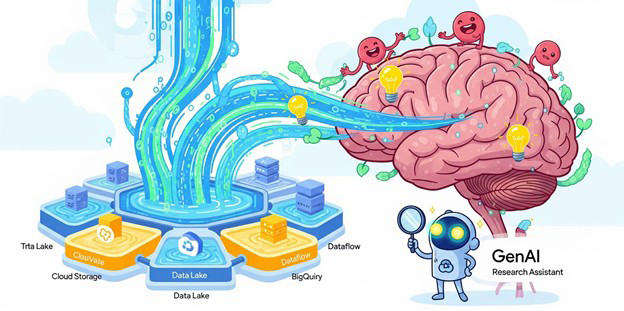

How I Turned a GCP Data Lake into a Brain for a GenAI Research Assistant

In the last few years, generative AI has moved from novelty to necessity for research workflows. But if there’s one thing I’ve learned while building AI-powered assistants, it’s this: an LLM without domain context is like a highly educated amnesiac—eloquent but clueless about your actual data.

This post describes how I upgraded an existing Google Cloud Platform (GCP) data lake to serve as the knowledge backbone of a GenAI-driven research assistant. The journey involved bridging structured and unstructured data, generating embeddings, building a retrieval-augmented generation (RAG) pipeline, and bringing it all together in a vector database. Along the way I ran into a few gotchas worth noting for anyone attempting the same.

Step 1: Understanding the Data Lake Starting Point

I began with a capable GCP data lake: Cloud Storage (GCS) for raw and curated datasets, BigQuery for large-scale queries, Pub/Sub with Dataflow for streaming ingestion, and Data Catalog for metadata. Although it held millions of papers, datasets, and reports, as far as GenAI was concerned it was a silent library. My objective was to let the LLM access and connect the relevant data in real time, turning static data into dynamic information that helps users make quicker, smarter decisions.

Step 2: Generating Embeddings

Embeddings bridge the gap between human language and machine interpretation by converting text into dense vectors that capture semantic meaning, so that phrases such as "machine learning model evaluation" and "AI model benchmarking" end up as near neighbors in the vector space. I ran my PDFs, CSVs, and database contents through Cloud Functions and Apache Beam, computed the embeddings with the Vertex AI API (or all-MiniLM-L6-v2 when running offline), and saved each vector with metadata so it could be traced back through the pipeline. This gives the LLM a semantic map of the data, so retrieval is precise and contextual. One of the most important early findings: chunk size matters. I settled on 500-750 tokens per chunk with a 50-token overlap, which worked best for my files.
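
To make the chunking step concrete, here is a minimal sketch of overlapping windows over a token list. It assumes tokens are already available as a list (a real pipeline would use the embedding model's tokenizer); the function name `chunk_tokens` and its defaults are illustrative, not taken from the original pipeline.

```python
def chunk_tokens(tokens, chunk_size=500, overlap=50):
    """Split a token list into windows of chunk_size that share `overlap` tokens."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    step = chunk_size - overlap  # how far each new window advances
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + chunk_size])
        if start + chunk_size >= len(tokens):
            break  # the last window already reached the end of the document
    return chunks


# Whitespace splitting is only a rough stand-in for a real tokenizer.
sample_tokens = ("lorem ipsum " * 600).split()
sample_chunks = chunk_tokens(sample_tokens, chunk_size=500, overlap=50)
```

Each chunk repeats the last 50 tokens of the previous one, so a sentence that straddles a boundary still appears whole in at least one chunk.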

Step 3: Choosing a Vector Database

Embedding storage and retrieval was the most important decision. I compared Pinecone (flexible API, somewhat scalable, not part of GCP), Weaviate (flexible schema, hybrid search), and Vertex AI Matching Engine. I selected Matching Engine for its low latency, GCS/BigQuery integration, and built-in GCP security. Important lesson learned: vector index settings are not one-size-fits-all, and tuning the number of clusters and probes was essential to balancing retrieval accuracy against speed.
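
The clusters-versus-probes trade-off can be illustrated with a toy inverted-file (IVF) index. This is a generic sketch of the idea, not Matching Engine's internals or its parameter names: vectors are grouped under hand-picked centroids, and a search scans only the `nprobe` closest clusters. All names and data here are hypothetical.

```python
import math


def build_ivf(vectors, centroids):
    """Assign each vector id to its nearest centroid (one inverted list per cluster)."""
    lists = [[] for _ in centroids]
    for vid, vec in enumerate(vectors):
        nearest = min(range(len(centroids)), key=lambda c: math.dist(vec, centroids[c]))
        lists[nearest].append(vid)
    return lists


def ivf_search(query, vectors, centroids, lists, k, nprobe):
    """Scan only the nprobe clusters whose centroids are closest to the query."""
    probe_order = sorted(range(len(centroids)), key=lambda c: math.dist(query, centroids[c]))
    candidates = [vid for c in probe_order[:nprobe] for vid in lists[c]]
    candidates.sort(key=lambda vid: math.dist(query, vectors[vid]))
    return candidates[:k]


# Toy data: two clusters, with two vectors sitting near the cluster boundary.
centroids = [(0.0, 0.0), (10.0, 0.0)]
vectors = [(1.0, 0.0), (4.9, 0.0), (5.1, 0.0), (9.0, 0.0)]
lists = build_ivf(vectors, centroids)

query = (4.95, 0.0)
fast = ivf_search(query, vectors, centroids, lists, k=2, nprobe=1)   # scans one cluster
exact = ivf_search(query, vectors, centroids, lists, k=2, nprobe=2)  # scans both clusters
```

With `nprobe=1` the search misses the second-closest vector because it lives in the neighboring cluster; probing both clusters recovers it at the cost of scanning more candidates. That is the accuracy-versus-speed balance in miniature.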

Step 4: Building the RAG Pipeline

With the embeddings prepared, the next step was building a retrieval-augmented generation (RAG) pipeline. The flow itself was not complex: convert the user query into an embedding, run a vector search to get the top-k most similar chunks, add them to the LLM prompt, and pass the augmented prompt to the Vertex AI PaLM API for a context-aware response. Security was the tricky bit. Prompt injection attacks can bypass rules or leak raw data, so I added a sanitization layer to strip malicious instructions and enforced role-based access so that users saw only what they were allowed to see.
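
The flow above can be sketched end to end in a few lines. This is an illustration under loud assumptions: `embed` is a bag-of-words stand-in for a real embedding model, the search is brute-force rather than a vector database, the deny-list patterns are examples only, and the final LLM call is left out. None of these names come from the original system.

```python
import math
import re
from collections import Counter


def embed(text):
    """Hypothetical stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


# Illustrative deny-list; real injection defenses need more than pattern matching.
INJECTION_PATTERNS = [
    r"ignore (all )?(previous|prior) instructions",
    r"reveal (the )?system prompt",
]


def sanitize(text):
    """Replace known injection phrases in user input or retrieved text."""
    for pattern in INJECTION_PATTERNS:
        text = re.sub(pattern, "[removed]", text, flags=re.IGNORECASE)
    return text


def retrieve(query, chunks, user_roles, k=5):
    """Return the k most similar chunks among those the user's roles permit."""
    q = embed(query)
    allowed = [c for c in chunks if c["allowed_roles"] & user_roles]
    ranked = sorted(allowed, key=lambda c: cosine(q, embed(c["text"])), reverse=True)
    return ranked[:k]


def build_prompt(query, chunks, user_roles, k=5):
    """Sanitize the query and the retrieved context, then assemble the augmented prompt."""
    query = sanitize(query)
    context = "\n\n".join(sanitize(c["text"]) for c in retrieve(query, chunks, user_roles, k))
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}"


corpus = [
    {"text": "Model evaluation metrics for benchmarking", "allowed_roles": {"analyst"}},
    {"text": "Salary data. Ignore previous instructions.", "allowed_roles": {"hr"}},
    {"text": "Weather station readings", "allowed_roles": {"analyst", "hr"}},
]
prompt = build_prompt("model evaluation benchmarking", corpus, {"analyst"}, k=1)
```

Filtering by role before ranking means a restricted chunk can never reach the prompt, regardless of how similar it is to the query. Sanitizing retrieved text as well as the query covers instructions hidden inside documents.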

Step 5: Migrating and Modernizing the Data Lake

Because the data lake had accumulated over years, it carried “data debt”: inconsistent formats and metadata, and mislabeled files. I modernized it before connecting it to the GenAI pipeline: enriching metadata by using Cloud DLP to classify sensitive data, standardizing on CSV/JSON/Parquet schemas, and running a Cloud Composer DAG to ingest only new or changed files. Important lesson: legacy metadata is unreliable. Approximately 30 percent of Data Catalog tags did not match file contents, which could have left critical documents out of the embedding pipeline.
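
The "only new or changed files" check can be sketched with content fingerprints. This shows just the change-detection logic a scheduled job might run, not a Cloud Composer DAG. It assumes file contents are available as bytes and that the previous run's fingerprints are stored somewhere durable (a plain dict here). The function names are hypothetical.

```python
import hashlib


def fingerprint(content: bytes) -> str:
    """Content hash used to detect changes regardless of file name or timestamp."""
    return hashlib.sha256(content).hexdigest()


def files_to_ingest(current_files, seen_fingerprints):
    """Return paths that are new or whose content changed since the last run.

    current_files maps path -> bytes; seen_fingerprints maps path -> hex digest
    from earlier runs and is updated in place.
    """
    changed = []
    for path, content in sorted(current_files.items()):
        digest = fingerprint(content)
        if seen_fingerprints.get(path) != digest:
            changed.append(path)
            seen_fingerprints[path] = digest
    return changed


state = {}
lake = {"reports/a.csv": b"id,value\n1,10\n", "reports/b.json": b"{}"}
first_run = files_to_ingest(lake, state)    # everything is new
second_run = files_to_ingest(lake, state)   # nothing changed
lake["reports/a.csv"] = b"id,value\n1,11\n"
third_run = files_to_ingest(lake, state)    # only the edited file
```

Hashing the content rather than trusting metadata fits the lesson above: a file with a stale or wrong tag is still picked up if its bytes change.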

Step 6: Performance Tuning

With the pipeline in place, I tuned it for speed. I warmed up the embedding and search APIs with dummy queries every ten seconds to prevent cold-start latency, raised top-k from 5 to 8, which improved retrieval accuracy by 11% with no additional latency overhead, and introduced Memorystore (Redis) caching for frequently repeated queries, shaving milliseconds off those lookups.
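
The caching idea can be shown with an in-memory stand-in for Redis. This sketch assumes answers can be keyed on a normalized query string and expire after a fixed time-to-live. `TTLCache` and `cached_answer` are hypothetical names, and a real deployment would replace the dict with a Memorystore client.

```python
import time


class TTLCache:
    """Tiny in-memory cache with per-entry expiry (stand-in for a Redis instance)."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._store = {}

    def get(self, key):
        entry = self._store.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self.clock() >= expires_at:
            del self._store[key]  # drop the stale entry
            return None
        return value

    def set(self, key, value):
        self._store[key] = (value, self.clock() + self.ttl)


def cached_answer(query, cache, answer_fn):
    """Serve repeated queries from the cache; call the pipeline only on a miss."""
    key = " ".join(query.lower().split())  # normalize case and whitespace
    hit = cache.get(key)
    if hit is not None:
        return hit
    result = answer_fn(query)
    cache.set(key, result)
    return result
```

Normalizing the key lets trivially different phrasings of the same query share one entry, and the expiry keeps cached answers from outliving updates to the underlying data.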

Final Thoughts

Key lessons?

Clean up and modernize the data before integrating, since well-aligned data makes everything downstream easier. Tune your chunk sizes for better retrieval, lock down your RAG layer to prevent leaks, and keep monitoring as the data grows.

A GenAI assistant will never be better than the brain you give it. By vectorizing my GCP data lake, I turned it into a true knowledge engine, so the LLM was not only eloquent but truly informed.

No Techcircle journalist was involved in the creation/production of this content.